Just a few thoughts as we conclude our week at Stanford.

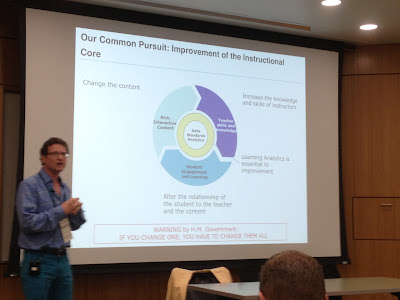

I realise I have a very different perspective on the proceedings. Many attendees are active teachers/researchers, working on their individual projects within their own courses. I, on the other hand, am interested in how we can take advantage of analytics at scale. In other words, where are the big gains for my institution as a whole? So my first takeaway is a reflection on where we are, and should be, focussing our efforts.

1. We are currently trialing a dashboard to give students feedback on their level of engagement with the university and their course. While many at LASI have been negative about dashboards, I am still optimistic about the prospect of getting big wins from giving students access to data that we already collect. At LASI13, I encountered and became very interested in Ruth Crick's work on Learning Power and Rebecca Ferguson's work on dispositions. I will explore the feasibility of incorporating ELLI in the dashboard.

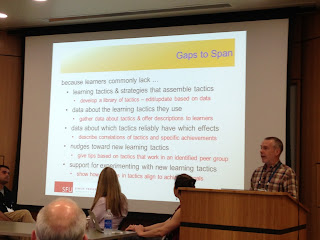

2. I am thinking about whether we are becoming guilty of 'provider capture' - producing data and analytics that we are excited about, but that don't actually change anything, i.e. students don't make use of them, and teachers don't change anything as a result. Will keep thinking on this, but with this in mind ...

3. I came away even more convinced of the urgent need for the "numeracy" course we are developing, i.e. providing opportunities for students to develop knowledge of math/statistics/probability without having to focus on equations and mathematics, so that they can be informed citizens & professionals. Had a wonderful discussion with Ian Witten (author of Weka). He recommended his project, Computer Science Unplugged:

"activities introduce students to underlying concepts such as binary numbers, algorithms and data compression, separated from the distractions and technical details we usually see with computers." Exactly what we are trying to do, but in numeric literacies!

4. After participating in the workshop on automated scoring and marking, I began to rethink my current stance. The current work is interesting and focussed on giving students a 'mark' and some feedback. My immediate reaction is that it wouldn't be as good as the feedback students would get from a good academic who is skilled at providing feedback. However, not all students have that experience, and I continually get survey results saying that students want more feedback. So my question is: would this kind of automated feedback be better than little (or no) feedback?

Also, I like David Boud's ideas about reducing student reliance on 'external' marking and feedback and helping them to develop self-assessment skills (see for example http://www.tandfonline.com/doi/abs/10.1080/713695728#.Udb46VPOkbo). I am trying to see how Pearson's work could support this approach, supplementing their current approach of giving a mark and automated feedback.

After tonight's 14+ hour flight home, I may well have other reflections :)

Interesting links/posts (to me anyway)

This site was recommended after an informal discussion about the challenges of leading people - a Stanford academic has written a book called 'The No Asshole Rule', and this is his blog

Kristen DiCerbo from Pearson

Martin Hawksey (@mhawksey) used a tool called Topsy to provide a visual record of #LASI13

http://hawksey.info/labs/topsy-media-timeline.html?key=tvBQ-F8CcGi2cOQONBzHg8g

Didn't get to the Gooru workshop (a search engine for learning resources) but must look at it: http://www.youtube.com/watch?v=nq9HTYiv7jM

And, on a personal level, LOVE the Moves App that Erik Duval put me onto