Tuesday 2 July

Session 1

Market Dynamics to Accelerate the Educational Data Science Field:

Making a market for learning analytics

Stephen Coller (Bill and Melinda Gates Foundation) @eduforker

His interest in this field began with a conversation with Roy Pea about the systems architecture required for learner ecosystems to provide personalised learning. The Gates Foundation sees great potential for this field to improve the experience of learning.

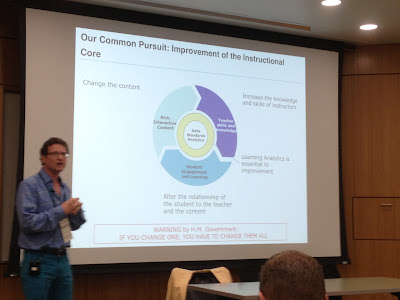

To improve the instructional core you need to:

- change content

- increase the knowledge and skills of instructors

- alter the relationship of the student to the teacher and the content

How might market forces contribute? Showed a diagram with four components in a circle: students -> product markets -> institutions -> resource markets -> students

Steps

1. A user-experience that gets heads nodding and hearts racing

Students "I am paying a s***-load. What are my gains? Where am I heading?"

2. A (credentialed) product or service to meet them

Product markets "tasks predict performance" -- mentioned Minerva model (high-end model - poses challenges, transdisciplinary teams to work on them). Thinks this currently high-end model may trickle down to community colleges.

3. Demand aggregated at sufficient scale to produce (data and dollar) resources

Institutions "How do I evaluate student outcomes? How do I assess and improve instruction?"

4. Responsive brokerage of data and dollars to meet and fuel follow-on demand

Resource markets "What is good content? What is an effective task?"

What role should foundations play?

(better image via Simon Buckingham Shum at pic.twitter.com/Zl0qEUdHWN)

horizontal axis - demand nascent to emerging to established

vertical axis - economic incentive for suppliers low to high

(blue) cell with established demand and high incentive

Strengthen incentive for performance and innovation: common performance and technical standards.

Says LA is between nascent and emerging on low end of incentives

Closing thoughts

- what are the quickest wins in the user experience?

- who is the principal customer for this service? States? Institutions? Households?

- Is this an embedded or stand-alone product? MOOCs could go 1 of 2 ways - as a new version of correspondence courses OR could weave the institutional experience with what goes on outside

- How might the Feds help?

- what are the barriers to innovation holding the market back?

- How can communities like LASI and platforms like Globus add up to a commons? What steps remain before we have an enabling environment?

- use of tools from private providers is a 'black box' solution - not open in any way

- are we equipping learners for the complexity of life?

Hearing good things about the Gooru workshop yesterday - I went to Google Apps workshop - well live-blogged by Doug Clow

Yes! can't agree more Kim Arnold :)

Session 2

Data mining and intelligent tutors

Ryan Baker & Ken Koedinger CMU

Learnlab - Pittsburgh Science of Learning Center

Ken says he will finish in 16 minutes :) then Ryan will speak.

Why is LA important?

Most of what we know we are not aware of, thus ed design is flawed. Therefore we need data-driven models of learners. "Cognitive models" can be automatically discovered.

example - you might say you know English. But do you know what you know?

Quotes Richard Clark with picture of iceberg

Cognitive tutors: interactive support for learning by doing

Ken more interested in what is hard to learn

Using model design -> deploy -> data -> discover ->

Using control-treatment group approach :(

Ryan Baker

Modeling engagement in ASSISTments - developed engagement assessments - for a perspective on engagement in the literature see Fredricks et al., 2004

look at affective and behavioural engagement - developed automated engagement detectors

studied

off-task behaviour -

gaming the system eg systematic guessing, hint abuse

affect including boredom, frustration, confusion
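To make "gaming the system" a bit more concrete for myself, here is a minimal sketch of a rule-of-thumb flag for systematic guessing and hint abuse. This is not the detector from the talk, which was developed from field observations. The `Action` record, the 3-second threshold and the run length of 3 are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Action:
    """One logged tutor action (hypothetical log format)."""
    kind: str        # "attempt" or "hint"
    correct: bool    # only meaningful for attempts
    seconds: float   # time taken before this action


def flag_possible_gaming(actions: Iterable[Action],
                         fast: float = 3.0,
                         run_length: int = 3) -> bool:
    """Return True if `run_length` consecutive actions are either
    fast wrong attempts (guessing) or fast hint requests (hint abuse).

    The thresholds are illustrative assumptions, not published values.
    """
    streak = 0
    for action in actions:
        quick = action.seconds < fast
        wrong_guess = action.kind == "attempt" and not action.correct
        hint_request = action.kind == "hint"
        if quick and (wrong_guess or hint_request):
            streak += 1
            if streak >= run_length:
                return True
        else:
            # Any slow or correct action breaks the streak.
            streak = 0
    return False
```

A hand-written rule like this would miss a lot and over-flag fast but honest students, which is presumably why the detectors in the talk were built from observed data rather than fixed thresholds.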

Field Observation method

protocol designed to reduce disruption to student

- some features: observe with peripheral vision or side glances, hover over student not being observed, 20-second round-robin ...

model assessment

cross-validation for generalisability

model goodness - looked at outcomes for

- boredom

- frustration

- engaged concentration

- confusion

- off-task

- gaming
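On the cross-validation point above: if the aim is for a detector to generalise to new students, one common choice is to split the data by student, so that no student's observations appear in both the training and test folds. The talk did not spell out the splitting scheme, so treat this as a sketch under that assumption. The function name and the example IDs are hypothetical.

```python
from collections import defaultdict
from typing import Iterator, List, Sequence, Tuple


def student_level_folds(student_ids: Sequence[str],
                        k: int = 5) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) pairs for k folds.

    `student_ids[i]` is the student who produced observation i.
    All observations from one student land in the same fold.
    """
    rows_by_student = defaultdict(list)
    for idx, sid in enumerate(student_ids):
        rows_by_student[sid].append(idx)

    students = sorted(rows_by_student)
    if k < 2 or k > len(students):
        raise ValueError("k must be between 2 and the number of students")

    for fold in range(k):
        # Every k-th student (starting at `fold`) is held out for testing.
        held_out = set(students[fold::k])
        test_idx = [i for i, sid in enumerate(student_ids) if sid in held_out]
        train_idx = [i for i, sid in enumerate(student_ids) if sid not in held_out]
        yield train_idx, test_idx


# Example: seven observations from four students, split into two folds.
observations = ["s1", "s1", "s2", "s3", "s3", "s3", "s4"]
folds = list(student_level_folds(observations, k=2))
```

Splitting by row instead of by student would let a model see the same student in training and test, which tends to overstate how well it will do on students it has never seen.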

Engagement and learning (ref?, 2013)

Applied affect detectors to middle school students to predict whether a student would attend college 6 years later. 58% college attendance

Showed results - but so fast, couldn't get it down. Doug Clow did a better job of this :)

Models predict end-of-year tests and college attendance. Goal: use them to make a difference in these constructs

Great comment on twitter:

Closing comment - we need to balance "The hare of intuitive design and the tortoise of cumulative science"

see also

D'Mello et al. (2008) Automatic Detection of Learner’s Affect from Conversational Cues http://ow.ly/mAViC

Critique: Interesting ideas but has reinforced my existing views on cognitive science! Learning is so complex we can't hold everything constant and vary one thing as we can in a laboratory. Therefore I can't see much point in the control-treatment group approaches to proving something works in learning. It also suffers from the old 'correlation does not equal causality' misconception as Kristen has pointed out above.

Concerned that LA is not making use of some of the more contemporary approaches to understanding and designing learning, such as those from neuroscience and phenomenography. Will try to write something more about this.

Session 3

Plenary: Learning Analytics in Industry: Needs and Opportunities

Chair: George Siemens

Speakers: Maria Andersen (Instructure), Alfred Essa (Desire2Learn), Wayne C Grant (Intel), John Behrens (Pearson)

George asking for perspectives

John starts with 4 major influences on data analysis:

- formal theories of statistics

- accelerated developments in computers and display devices

- the challenge in many fields of more and ever larger bodies of data

- the emphasis on quantification in an ever wider variety of disciplines

refers to common issues between higher ed and K-12

fundamental flow about how LA happens

world -> symbol -> analysis -> interpretation -> communication

pic from Harris, Murphy & Vaisman (2013) Analyzing the Analyzers: an introspective survey of data scientists and their work

Alf from Desire2Learn

Started out by quoting my question from yesterday on what are the really big questions?

Which results are promising and are they reproducible?

Eric Mazur's work on the flipped classroom - lecture mode in Physics is completely ineffective. Can close the gender gap with interactive teaching (at least in Physics); potentially generalisable in STEM anyway?

refs:

http://www.physics.indiana.edu/~hake/

http://ajp.aapt.org/resource/1/ajpias/v74/i2/p118_s1

Carl Wieman (UBC) - experiment - 2 groups - lecture vs interactive learning. Experimental group scored twice as well as traditional. Astounding result. Who is reproducing that experiment?

Wayne from Intel

principles - designing whole solutions to deliver personalised, active learning experiences.

4 major pillars of activity:

- products: OEM products, Intel classmates program

- software

- locally relevant content: Pearson, McGraw Hill

- implementation support: Intel teach program

Mentions Gartner group's hype cycle - Gartner puts LA at the top of the peak of inflated expectations. Audience puts it as rising to that. (See current version of hype cycle and technologies)

Says we have to be careful otherwise it will get killed

Maria Andersen from Instructure

Is from a much smaller company hence different perspective - what we need from researchers

We haven't changed the habits of teachers despite all the data we have been collecting and the analytics. Frustrating that we create platforms for teachers, but they don't look at the data. Looking for insights into how that data should be 'surfaced'

Industry wants access to research. She hopes researchers will continue to publish pre-prints, and that papers will include a "practical application" section to help industry practitioners.
